AI Playground: 10 new ways to get hands-on experience at work

🧠 Agentic browser actions in Perplexity Comet, Google Sheets AI function, MCP servers and Linear for status updates, mini apps for OKRs with Airtable. Get up to speed quickly. Knowledge Series #80.


It’s been 6 months since the last AI playground back in February. Needless to say, a lot has changed since then.

If you’re new here, AI playgrounds are a special type of Knowledge Series guide designed to bring you up to speed quickly on the latest AI features and functionality by getting hands-on with a broad range of products.

In this playground, we’ll explore how to use new products and features like Perplexity’s AI browser Comet, Genspark’s generative AI spreadsheet builder and Google’s impressive video generation model, Veo 3. Every use case is designed with product / tech teams in mind so that it’s as relevant as possible at work. Examples include automatically building lists of LinkedIn profiles for hiring candidates who meet highly specific criteria, creating customer testimonial videos, building mini apps for OKRs and more.

Coming up, how to:

Audit user journeys and perform agentic actions with Perplexity Comet

Get insights from spreadsheets with the new AI function in Google Sheets

Create a customer testimonial video with Veo 3

Use Claude MCP servers and connectors to get status updates from items in Jira and Linear

Use custom-built C-suite Google Gems to test your content before sharing it

Generate a PDF report on engineering process optimization with Grok

Perform competitor analysis and create tables of LinkedIn profiles with Genspark

Build a voice-first user onboarding assistant with ElevenLabs Conversations

Create a professional-grade resume based on your LinkedIn profile in one prompt

Build a mini app for tracking OKRs with Airtable

1. Audit user journeys and perform agentic actions with Perplexity Comet

Perplexity has now officially released its new AI browser, Comet, with invites to the beta sent out last week. I’ve been playing around with it since then, and despite some disappointing early issues with page jank and stuttering (now mostly ironed out), it still feels as though the browser is one of the best places to interact with AI on a daily basis.

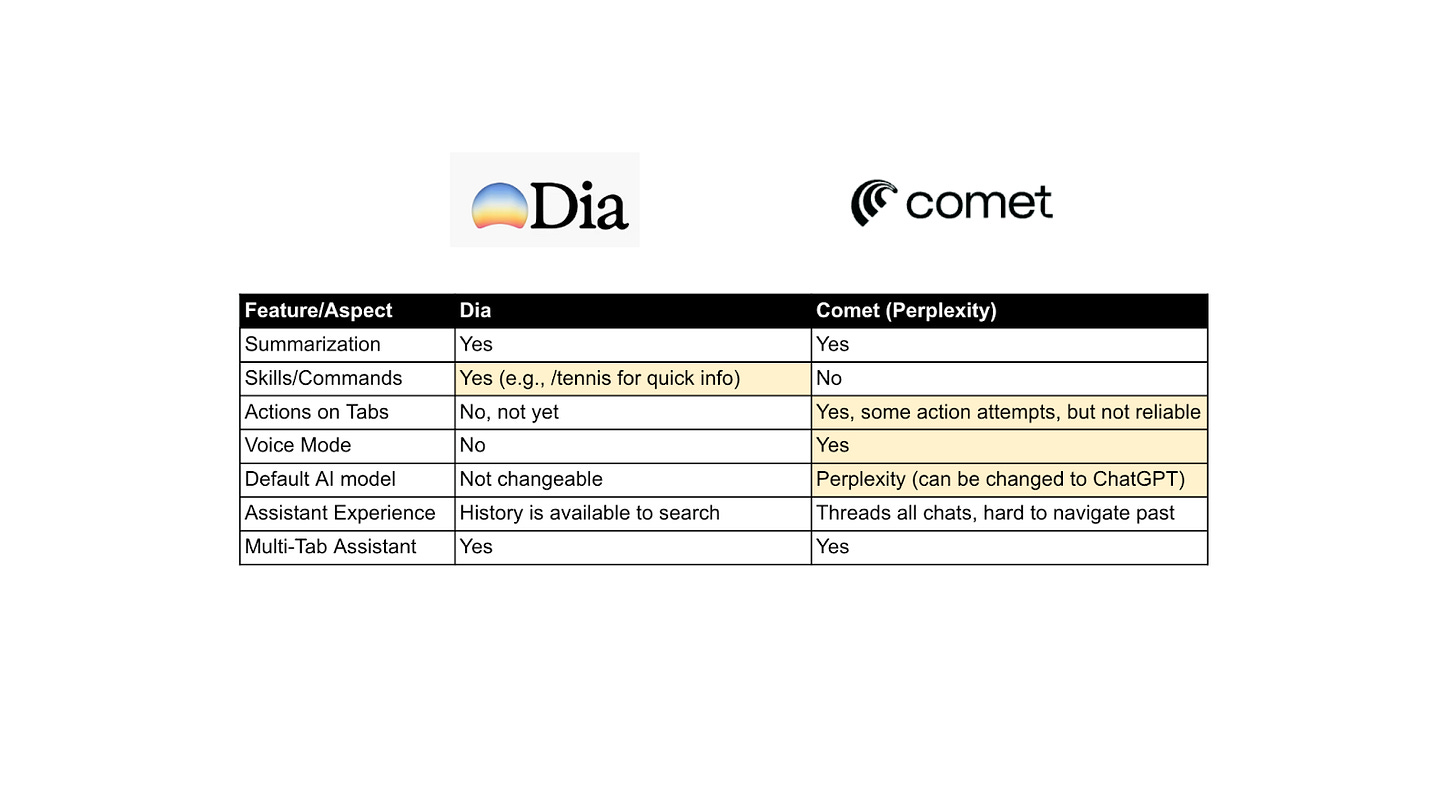

Here’s a snapshot of how Perplexity’s Comet stacks up against another AI browser, Dia:

Both have similar capabilities, like multi-tab assistants and AI summarization. But while Dia can create custom skills, Comet has a major feature that Dia currently lacks: agentic actions.

This allows Comet to perform actions in the browser on your behalf. For product teams, this could be particularly helpful for usability testing or for trying out specific user journeys. For example, imagine you’ve released a new payment journey and want to identify potential sticking points: you could ask Comet to go through the journey and flag potential problems for review.

Example - using Perplexity Comet to test user journeys and pages

In this example, we’ll ask Perplexity Comet’s Assistant to visit Twilio’s homepage, sign up for an account, conduct a usability audit and provide us with a list of positives / negatives, along with recommended actions / next steps.

Here’s the prompt we can use for this:

Imagine you're a first time user visiting this site. try signing up for an account.

talk me through your thought process on the overall user experience. first, focus on the homepage and its usability and then the sign up process overall.

explain your thoughts and give me some suggested UX recommendations I can consider.

When you ask Comet to perform actions, it automatically switches into its agentic action mode and starts visiting websites and performing the necessary actions on your behalf.

Here’s a video of this process in Comet using Twilio’s homepage and registration flow:

In its usability recommendations, it suggests several improvements such as:

Showing a progress bar to allow users to see where they are in the overall registration process

Suggesting a quick demo mode for people who aren’t yet ready to sign up

Offering guidance for users who haven’t received their confirmation email, e.g. “didn’t receive your email?”

This is just one way to use agentic actions, of course, but it’s a neat introduction to how agents can perform actions on your behalf.

2. Get insights from spreadsheets with the new AI function in Google Sheets

Google Sheets recently added a new AI function that lets you embed generative AI prompts directly into spreadsheet cells.

The AI function in Sheets has 3 parts:

The function name (AI)

The prompt you want to use

The range you want to apply the function to

In this example, we can use the AI function to categorize feedback from users into positive or negative based on the sentiment in the qualitative feedback columns.

Once applied, the function automatically categorizes each row based on the sentiment of its feedback. You could then select the categorized rows and use the AI function again to help draft emails or follow-ups based on the content.
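The three parts above map onto a formula of the form `=AI(prompt, range)`. As a minimal sketch, assuming that syntax (check Google’s Sheets help page for the current signature and availability), the Python below generates both formulas for a feedback sheet: one to classify sentiment, and one that reads the feedback plus its sentiment to draft a follow-up. The column letters, row numbers and prompt wording are all hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical prompts for illustration; tune the wording for your own data.
SENTIMENT_PROMPT = "Classify the sentiment of this feedback as Positive or Negative. Reply with one word."
FOLLOW_UP_PROMPT = "Using this feedback and its sentiment, draft a short follow-up email to the customer."


def sheets_string(text: str) -> str:
    """Quote text as a Sheets string literal (embedded double quotes are doubled)."""
    return '"' + text.replace('"', '""') + '"'


def ai_formula(prompt: str, cell_range: Optional[str] = None) -> str:
    """Assemble the three parts: function name (AI), the prompt, and an optional range."""
    args = [sheets_string(prompt)]
    if cell_range:
        args.append(cell_range)
    return "=AI(" + ", ".join(args) + ")"


def formulas_for_rows(
    feedback_col: str, first_row: int, last_row: int, sentiment_col: str = "D"
) -> List[Tuple[str, str]]:
    """For each row, return (sentiment formula, follow-up formula).

    The sentiment formula reads only the feedback cell (e.g. C2); the follow-up
    formula reads the feedback cell plus the adjacent sentiment cell (e.g. C2:D2).
    """
    pairs = []
    for row in range(first_row, last_row + 1):
        feedback = f"{feedback_col}{row}"
        both = f"{feedback_col}{row}:{sentiment_col}{row}"
        pairs.append((ai_formula(SENTIMENT_PROMPT, feedback), ai_formula(FOLLOW_UP_PROMPT, both)))
    return pairs


if __name__ == "__main__":
    # Print formulas for feedback in C2:C3, with sentiment landing in column D.
    for sentiment, follow_up in formulas_for_rows("C", 2, 3):
        print(sentiment)
        print(follow_up)
```

In practice you would usually type the formula once and drag it down the column; generating them in code is mostly useful when a script or automation tool is writing to the sheet for you.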

3. Create a customer testimonial video with Veo 3

After a rocky start, Google is now leading the way across several generative AI applications, especially video.

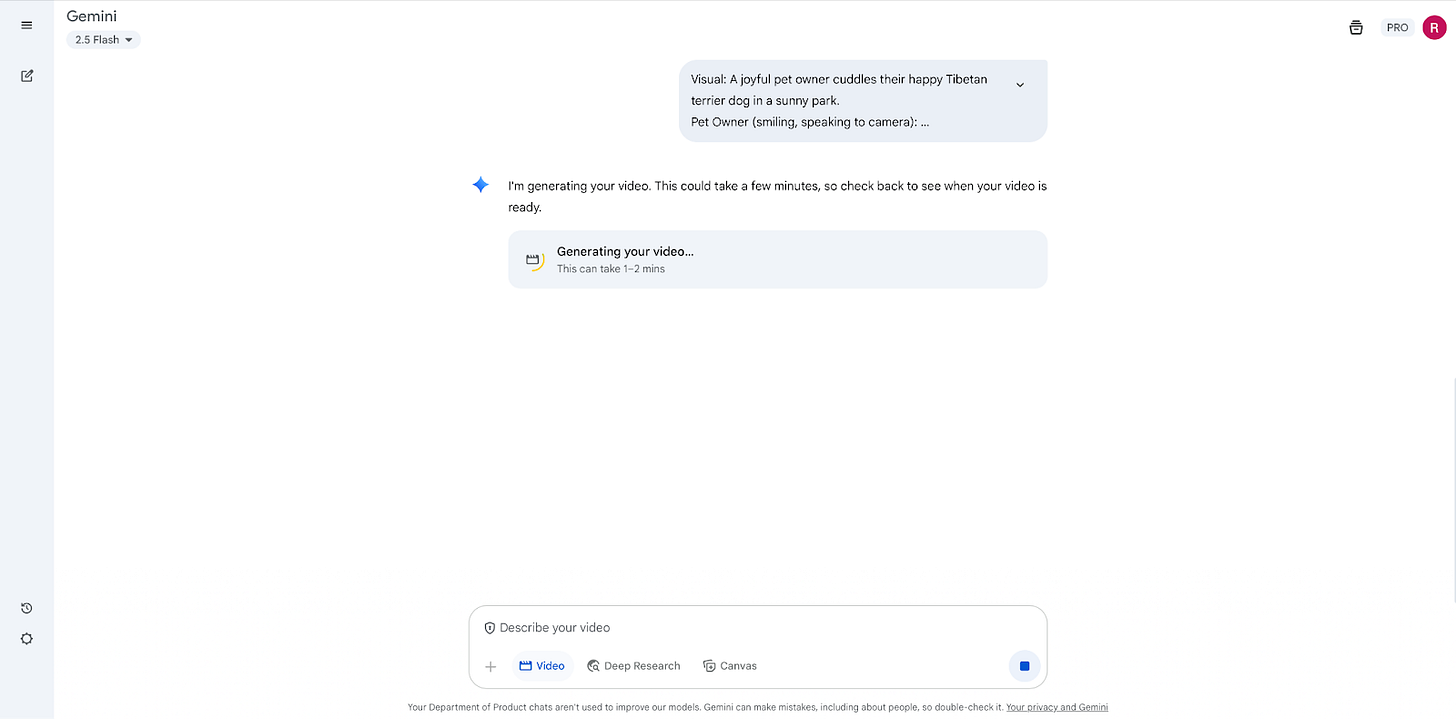

Veo 3 is arguably the leading text-to-video generation model. For this example, we’re using the Gemini web app, which can now generate 8-second videos.

How to write video generation prompts

There are multiple ways to write video generation prompts, but a solid prompt will include one or more of the following:

Scene descriptions - defines the environment and atmosphere of the video, setting the stage for the narrative e.g. A tense scene in a dimly lit interrogation room at night, with flickering fluorescent lights

Main subjects - defines the focal point of the video, whether it’s a person, animal, object, or group, ensuring the AI prioritizes it e.g. A glamorous woman with a vintage 1950s hairstyle, gold jewelry, and a confident expression, lounging by a pool

Visual styles - defines the aesthetic look of the video, ensuring it aligns with your creative vision e.g. Cinematic style with a gritty urban texture, neon lights reflecting off wet pavement

Camera movement - controls how the camera behaves to create a cinematic feel and direct viewer attention e.g. Slow dolly in on the suspect’s face, shallow depth of field, keeping the background blurry

Audio description / scripts - guides Veo 3’s native audio generation to include dialogue, sound effects, ambient noise, or music (a new feature announced just last month) e.g. Audio: rhythmic ticking of a clock, shallow breathing. He says: I didn’t do it (no subtitles).
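To make the five components concrete, here is a small prompt builder in Python. It is only an organizing aid: Veo 3 takes free-form text, and the `Scene:` / `Camera:` style labels are a convention assumed here for readability, not a required format. The `VideoPrompt` class is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VideoPrompt:
    """The five prompt components described above; each one is optional."""
    scene: Optional[str] = None
    subject: Optional[str] = None
    style: Optional[str] = None
    camera: Optional[str] = None
    audio: Optional[str] = None

    def render(self) -> str:
        """Join the filled-in components into one labeled prompt, in a fixed order."""
        parts = [
            ("Scene", self.scene),
            ("Subject", self.subject),
            ("Style", self.style),
            ("Camera", self.camera),
            ("Audio", self.audio),
        ]
        lines = [f"{label}: {text}" for label, text in parts if text]
        if not lines:
            raise ValueError("A video prompt needs at least one component")
        return "\n".join(lines)


if __name__ == "__main__":
    prompt = VideoPrompt(
        scene="A tense scene in a dimly lit interrogation room at night, with flickering fluorescent lights",
        camera="Slow dolly in on the suspect's face, shallow depth of field, keeping the background blurry",
        audio="Rhythmic ticking of a clock, shallow breathing. He says: I didn't do it (no subtitles).",
    )
    print(prompt.render())
```

Keeping the components separate makes it easy to hold the scene and style constant while varying only the dialogue, which is handy when generating several testimonial variants.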

Example - customer testimonial video using Veo 3

Now let’s apply some of those principles to a real-world scenario. In this example, we’ll imagine we work at a pet insurance company and ask Veo 3 to create a short video testimonial based on customer feedback:

Visual: A joyful pet owner cuddles their happy Tibetan terrier dog in a sunny park.

Pet Owner (smiling, speaking to camera):

"SuperPet insurance makes caring for my furry best friend so easy! Affordable plans, quick claims, and they truly love pets as much as I do!"

Visual: Dog wags tail, text overlay reads: "SuperPet: Protection Pets Love!"

Voiceover:

Visual: SuperPet logo fades in with website URL: superpet.com.

End scene.

After 2 or 3 minutes, Veo 3 generates a lifelike video with audio that we can use wherever we want. The on-screen text in this example isn’t quite ready for production, but the video and audio are almost uncannily realistic:

How to automate the video generation process

You could take this a step further and automate the process using the Veo 3 API.

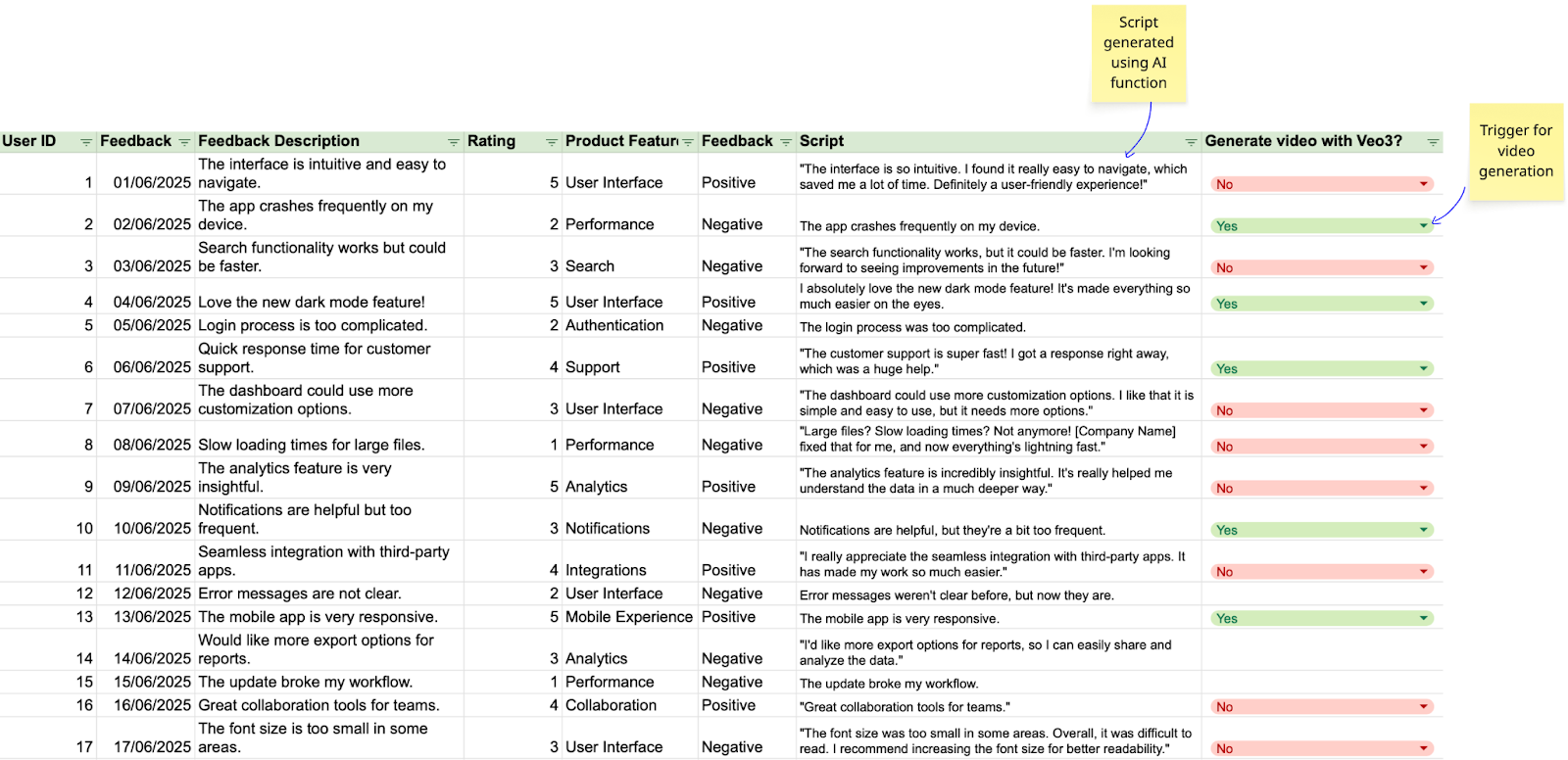

For example, you could link it to your customer feedback spreadsheet and create videos based on real-world user feedback. In this Sheet, we’re using the AI function to generate a script from that feedback:

Paired with automation tools like n8n or Zapier, you could set up a trigger that watches the script column for updates and, whenever it changes, generates a video from the new script.

Here’s an n8n workflow that shows how you might set this up.
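Tools like n8n and Zapier handle the polling for you, but it helps to know what such a trigger is actually deciding. The sketch below shows that logic in plain Python: given the current contents of the script column (keyed by row number) and the fingerprints recorded on the previous poll, it returns only the rows that are new or changed. How you read the sheet and where you store `seen` between runs are left out; both function names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def row_fingerprint(text: str) -> str:
    """Stable hash of a cell's contents, ignoring leading/trailing whitespace."""
    return hashlib.sha256(text.strip().encode("utf-8")).hexdigest()


def rows_needing_videos(rows: Dict[int, str], seen: Dict[int, str]) -> List[Tuple[int, str]]:
    """Return (row, script) pairs that are new or changed since the last poll.

    `seen` maps row number -> fingerprint and is updated in place, so rows are
    only queued again if their script text actually changes.
    """
    changed = []
    for row, text in sorted(rows.items()):
        if not text.strip():
            continue  # skip empty cells, e.g. ones the AI function hasn't filled yet
        fp = row_fingerprint(text)
        if seen.get(row) != fp:
            changed.append((row, text))
            seen[row] = fp
    return changed


if __name__ == "__main__":
    seen: Dict[int, str] = {}
    print(rows_needing_videos({2: "Script A", 3: "Script B"}, seen))  # both rows are new
    print(rows_needing_videos({2: "Script A", 3: "Script B v2"}, seen))  # only row 3 changed
```

Each returned script would then be passed to the video generation step; hashing rather than storing the full text keeps the saved state small.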

4. Use Claude MCP servers and connectors to get status updates from items in Jira and Linear

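Next, let’s look at MCP (Model Context Protocol) servers, the open standard Anthropic introduced for connecting AI assistants to external tools and data, and how you can use them at work.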

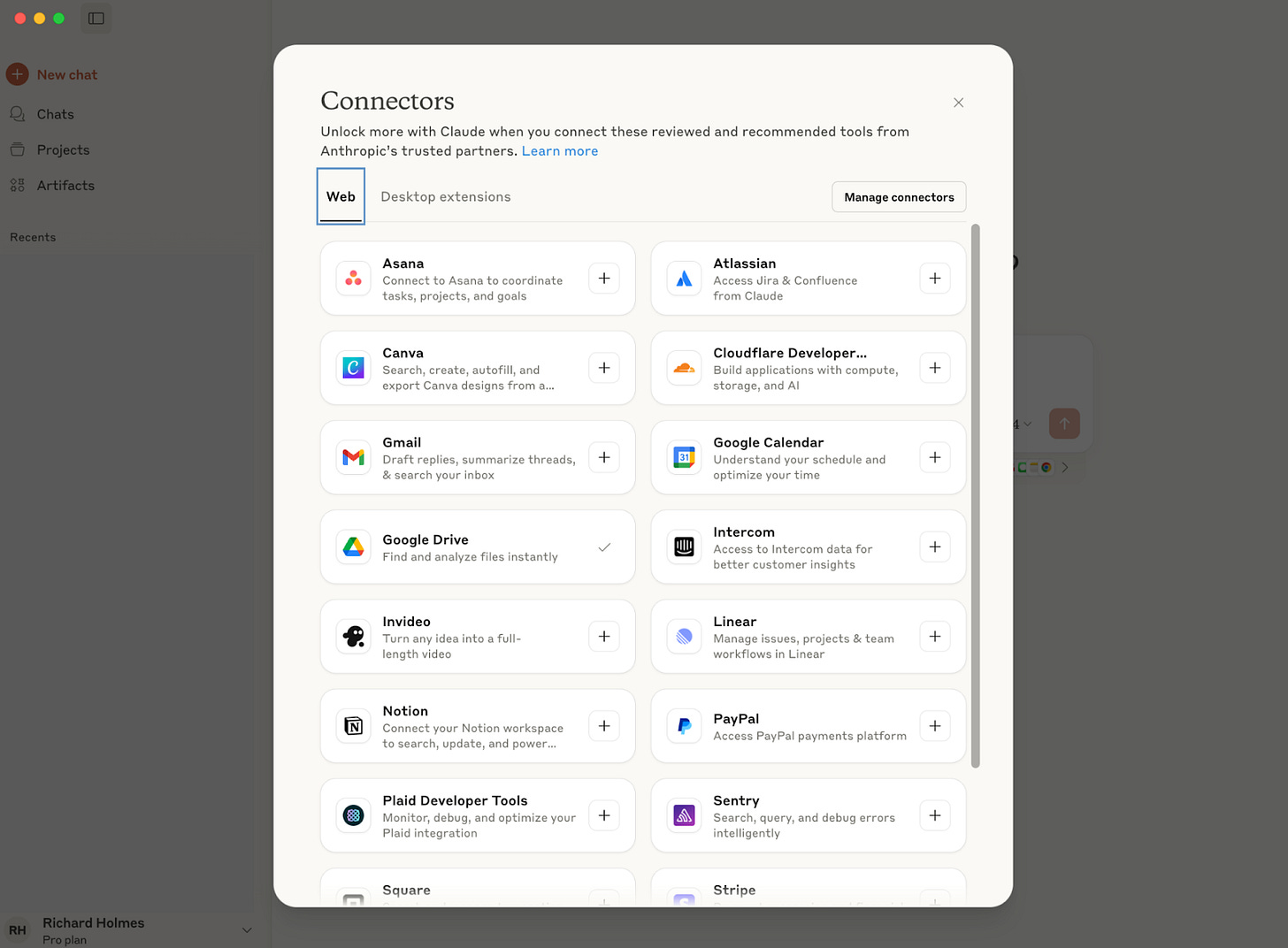

Claude is making it increasingly easy to integrate with MCP servers. Over the past two weeks or so, Anthropic has released two new features for this: “Connectors” and “Extensions”.

As part of this, you can now easily set up MCP servers from leading companies and products including Google, Notion, Linear, Jira, Figma and others:

Example - using Claude with MCP to get status updates from Linear or Jira

Let’s imagine I want to use Claude to check on the status of stories in development in Linear without having to log into Linear. With Claude’s new “Connectors” feature you can get MCP servers set up super quickly.
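Connectors are added from within Claude itself, so no code is needed. If you’d rather wire up the same Linear server manually in Claude Desktop, the usual route is an `mcpServers` entry in `claude_desktop_config.json`. The sketch below adds one, assuming Linear’s remote endpoint `https://mcp.linear.app/sse` bridged through the `mcp-remote` npm package, as Linear’s docs described at the time of writing; confirm both before relying on them. Restart Claude Desktop afterwards so it picks up the change.

```python
import json
import os
import sys
from pathlib import Path
from typing import Any, Dict, List

# Assumption: endpoint from Linear's MCP docs at time of writing; verify before use.
LINEAR_MCP_URL = "https://mcp.linear.app/sse"


def default_config_path() -> Path:
    """Claude Desktop's config file location on macOS and Windows."""
    if sys.platform == "darwin":
        return Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"
    if sys.platform == "win32":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    raise RuntimeError("Claude Desktop config path not known for this platform")


def add_mcp_server(config: Dict[str, Any], name: str, command: str, args: List[str]) -> Dict[str, Any]:
    """Return a copy of the config with one MCP server entry added (or replaced)."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round-trip
    servers = updated.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args)}
    return updated


def install_linear_server(path: Path) -> None:
    """Merge the Linear entry into an existing config file, keeping other settings."""
    config = json.loads(path.read_text()) if path.exists() else {}
    config = add_mcp_server(config, "linear", "npx", ["-y", "mcp-remote", LINEAR_MCP_URL])
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2))


if __name__ == "__main__":
    install_linear_server(default_config_path())
```

Once the server is connected, a prompt such as “What’s the status of the stories in our current Linear cycle?” lets Claude query Linear through the MCP tools instead of you logging in.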