Google’s new AI Canvas: How to use it at work

🧠Knowledge Series #65: Teach yourself anything, Conduct product market analysis, Build prototypes, Create interactive process documents and more…

🔒The Knowledge Series is available for paid subscribers. Get full ongoing access to 60+ explainers and tutorials to grow your technical knowledge at work. New guides added every month.

To some extent, the leading AI products all seem to be playing catch-up with each other, leaving little room for differentiation: Deep Research, Thinking Models, Web Search, Browser Operators… There's now barely any difference between the leading products as they all scramble to reach feature parity.

Last week, it was Google's turn to play catch-up with rivals like Anthropic. It released Canvas, a feature in the style of Claude's Artifacts that lets users build interactive prototypes and edit documents directly in the canvas. But Google did more than just catch up: it used the release of Canvas to add a few twists of its own.

In this Knowledge Series tutorial, we'll dig a little deeper into how Google Canvas works and how you can use it at work to craft documents, build prototypes and teach yourself new concepts. Alongside Canvas, Google also released new features for Deep Research, plus a new NotebookLM-inspired audio generation feature that brings Workspace documents to life. We'll look at how you can use Gemini with those new features, too.

Coming up, how to use Google’s new AI Canvas and Deep Research at work to:

Build a self-study guide to understand how APIs work

Conduct product market analysis before adding AI into your development workflows

Build functional prototypes for testing new features

Create interactive process documents

Draft product release notes

Transform strategy decks in Google Slides and Deep Research reports into audio using the new Audio Overviews feature

1. Build a self-study guide to understand how APIs work

Google Canvas' interactive elements make it an excellent tool for teaching yourself new concepts. In Canvas, you can select a specific chunk of text and ask Gemini either to edit it directly in the canvas or to expand on a topic you're not too sure about and need more help with.

In this first example, imagine you've just started a new role, or been promoted into one, where you're suddenly working with APIs a lot and need a refresher. Using Canvas, we can ask Gemini to create a self-study guide that we'll then turn into interactive assets for teaching ourselves more about specific topics. You can do this with any topic you like, of course, but for ease we'll stick with APIs.

Here’s a prompt we can use to get started:

I've just been promoted in my company and my new role requires me to know a lot about our API products. We have both a REST API and a GraphQL API. I know how the GraphQL API works but I need a refresh on some REST API concepts. Can you generate a study guide that teaches me the essential aspects of APIs that I should know about? I work in the product team where I do a hybrid role of product management and design but I'm not an engineer. Tailor it to my level.

Gemini responds with a document in Canvas entitled REST API Study Guide for Product People. The guide covers everything we need to know, from the basics (What is an API?) through to REST-specific terminology like resources and endpoints. You can grab a copy of the document it created here.

But while the document itself is a useful outline of the core topics we want to teach ourselves, the real power of Canvas is its inline editing, which lets us dig deeper into the specific areas we want to learn more about.

Ask it to clarify what JSON is

In this example, I've highlighted the mention of JSON in the study guide and asked Gemini to explain what it means in a bit more detail.

Gemini responds directly in the Canvas by adding some extra context to help me understand what JSON is and how it works. This is super helpful, and being able to make the edits inline in the canvas is a lot easier than copying and pasting chunks between two documents.
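If you want to see what that explanation looks like in practice, here is a minimal sketch using Python's standard `json` module. The product record and its fields (`id`, `name`, `price_usd` and so on) are hypothetical, invented purely for illustration; they are not taken from the study guide Gemini generated.

```python
import json

# A hypothetical JSON response body, as a REST API might send it: plain text.
raw_response = """
{
  "id": 42,
  "name": "Starter Plan",
  "price_usd": 19.99,
  "features": ["API access", "Email support"],
  "active": true
}
"""

# json.loads turns JSON text into native Python types:
# objects -> dict, arrays -> list, true/false -> True/False, numbers -> int/float.
product = json.loads(raw_response)

print(product["name"])           # Starter Plan
print(len(product["features"]))  # 2
print(product["active"])         # True

# json.dumps goes the other way, e.g. when building a request body to send.
request_body = json.dumps({"name": "Pro Plan", "price_usd": 49.0})
print(request_body)  # {"name": "Pro Plan", "price_usd": 49.0}
```

The key idea for non-engineers: JSON is just structured text, and each side of an API converts it to and from its own data types.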

Use prototypes to bring concepts to life

Canvas also lets you take things a step further by building interactive prototypes that bring concepts to life. In this example, I highlight the text from the study guide that explains how APIs work and ask Gemini to build a prototype in Canvas that simulates how a REST API works. After a bit of back and forth, we end up with a tool that simulates the basic workflow of a REST API: I can give it parameters to use in the request, and the API responds accordingly.
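For the curious, the logic behind a simulator like this can be surprisingly small. The sketch below is a hypothetical illustration, not the code Gemini produced: the `/users` resource, the `handle_request` function and the in-memory `users` store are all invented for the example, and a real API would sit behind an HTTP server rather than a plain function call. It shows the core REST idea of routing a method (GET, POST, DELETE) plus a path (an endpoint) to a resource, and answering with a status code and a JSON body.

```python
import json

# Hypothetical in-memory store standing in for a real backend database.
users = {1: {"id": 1, "name": "Ada"}}


def handle_request(method, path, body=None):
    """Simulate routing a REST request to the /users resource.

    Returns a (status_code, json_text) pair, mimicking an HTTP response.
    """
    parts = [p for p in path.split("/") if p]  # "/users/1" -> ["users", "1"]
    if not parts or parts[0] != "users":
        return 404, json.dumps({"error": "Not found"})

    # Collection endpoint: /users
    if len(parts) == 1:
        if method == "GET":  # read the whole collection
            return 200, json.dumps(list(users.values()))
        if method == "POST":  # create a new user from the request body
            new_id = max(users, default=0) + 1
            users[new_id] = {**(body or {}), "id": new_id}
            return 201, json.dumps(users[new_id])
        return 405, json.dumps({"error": "Method not allowed"})

    # Single-resource endpoint: /users/{id}
    if len(parts) == 2 and parts[1].isdigit():
        user_id = int(parts[1])
        if user_id not in users:
            return 404, json.dumps({"error": "User not found"})
        if method == "GET":  # read one user
            return 200, json.dumps(users[user_id])
        if method == "DELETE":  # remove one user; 204 means "no content"
            del users[user_id]
            return 204, ""
        return 405, json.dumps({"error": "Method not allowed"})

    return 404, json.dumps({"error": "Not found"})


# Try a few requests, the way you might in an interactive prototype.
print(handle_request("GET", "/users/1"))
print(handle_request("POST", "/users", {"name": "Grace"}))
print(handle_request("GET", "/users/99"))
```

Keeping the response as a status code plus JSON text mirrors what a real REST API returns, which makes it easier to map the simulation back onto the concepts in the study guide.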

Create a quiz

If I wanted to take things even further, I could ask Gemini to create a quiz in Canvas based on all of the topics outlined in the original study guide:

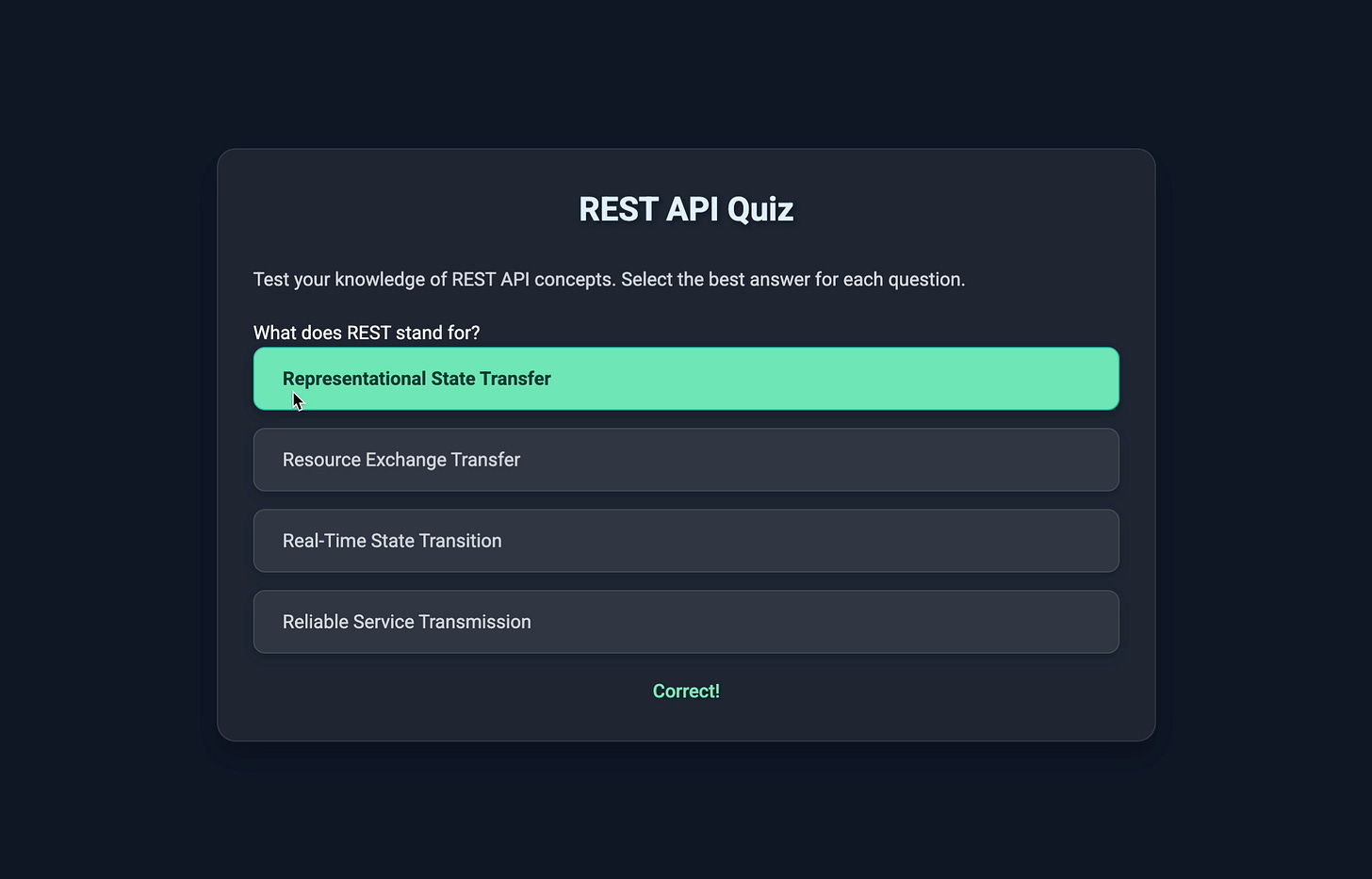

Create a basic interactive quiz in canvas that tests my knowledge based on the information included in the study guide outline

Gemini responds by crafting a basic interactive quiz with questions based on the original study guide. At the end of the quiz, you're shown a score out of 10. If you wanted to add timers for each question or focus on a specific part of the study guide, you could ask Gemini to rewrite the questions and try the quiz again.
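Under the hood, a quiz like this needs little more than a list of questions with their correct answers and a function that tallies the responses. The sketch below is a hypothetical illustration, not the code Gemini produced; the `Question` class, the `score_quiz` function and the three sample questions are invented for the example (a 10-question quiz would work the same way).

```python
from dataclasses import dataclass


@dataclass
class Question:
    prompt: str
    options: list[str]
    answer_index: int  # position of the correct option in `options`


# Hypothetical questions of the kind a REST study guide quiz might include.
QUESTIONS = [
    Question("Which HTTP method is typically used to retrieve a resource?",
             ["GET", "POST", "DELETE"], 0),
    Question("What does a 404 status code mean?",
             ["Success", "Not found", "Server error"], 1),
    Question("Which format do REST APIs most commonly use for response bodies?",
             ["CSV", "JSON", "PDF"], 1),
]


def score_quiz(questions, responses):
    """Count how many chosen option indexes match the correct answers."""
    if len(responses) != len(questions):
        raise ValueError("Expected one response per question")
    return sum(1 for q, r in zip(questions, responses) if r == q.answer_index)


responses = [0, 1, 0]  # the learner's chosen option for each question
correct = score_quiz(QUESTIONS, responses)
print(f"You scored {correct}/{len(QUESTIONS)}")  # You scored 2/3
```

Storing the answer as an index into the options keeps the scoring logic independent of the question wording, so asking Gemini to rewrite the questions wouldn't change how the score is calculated.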

2. Conduct market analysis before adding AI agents into our development process

As part of its recent updates, Deep Research is now powered by Gemini 2.0 Flash Thinking Experimental, which enhances its reasoning and research capabilities. This lets Deep Research generate more comprehensive and insightful reports, often in just 2-3 minutes, which is faster than before. Plus, Deep Research is now free for all users.

For this example we’re going to look at how we can use the latest version of Deep Research to understand how we might be able to add AI agents into our software development processes.

We’ll start with this prompt: