🧠 Knowledge Series #28: How does an integration with an LLM work?

Everything you need to know about integrating with companies like OpenAI to power your AI product features

🔒 The Knowledge Series is a collection of easy-to-read guides designed to help you plug the gaps in your tech knowledge so that you feel more confident when chatting to colleagues. Clearly explained in plain English. One topic at a time.


Hi product people 👋,

In this Knowledge Series we’re going to dig a little deeper into the more technical aspects of using Large Language Models (LLMs) to power AI features so that the next time you’re having a conversation with a technical colleague you’ll understand some of the AI-specific language they’re using.

With the help of some examples from Notion, Amazon and Google Maps, we’ll look at some of the most important terms you need to know about in this space including things like vector databases, prompt optimization and more.

Coming up:

What is an LLM and why are product teams integrating with them?

The basic technical components of an LLM integration

What you can do with an API integration with a Large Language Model (LLM) - real examples using OpenAI

3 examples of recent features from Notion, Amazon and Google which use LLMs to help you understand how to use them in your own product

The full list of the leading LLMs product teams can integrate with and how they compare

What is an LLM and why are product teams integrating with them?

Large language models (LLMs) are a type of neural network that takes in a string of text and predicts which words are likely to come next. Chatbots like ChatGPT are built on LLMs that have been further trained for the specific purpose of generating a response to a question.
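To give a feel for what "predicting the next word" means, here's a deliberately tiny sketch. It is not how a real LLM works (real models use neural networks trained on billions of words); it simply counts which word most often follows another in a made-up sentence. The function names and the sample text are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    """Return the most frequently seen next word, or None for an unseen word."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in this text
```

An LLM does something similar in spirit, but it looks at the whole preceding passage rather than just the last word, which is why its predictions are so much better.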

We’re not going to go too deeply into how they work in this post but to give you a quick summary, LLMs work by taking a string of words and representing them as sequences of numbers which capture the meaning of a word in relation to other words.
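Here's a rough sketch of the "words as numbers" idea. The three-number lists below are hand-made and invented for this example; real models learn lists with hundreds or thousands of numbers during training. The `cosine_similarity` helper is a standard way of measuring how closely two lists of numbers point in the same direction.

```python
import math

# Hand-made "embeddings", invented for illustration only.
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Score how similar two vectors are: close to 1.0 means very similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

king_queen = cosine_similarity(embeddings["king"], embeddings["queen"])
king_apple = cosine_similarity(embeddings["king"], embeddings["apple"])

# With these made-up numbers, "king" scores closer to "queen" than to "apple".
print(f"king vs queen: {king_queen:.2f}")
print(f"king vs apple: {king_apple:.2f}")
```

This is the basic idea behind terms like "embeddings" and "vector databases": related words (or documents) end up with similar numbers, so software can find them by comparing the numbers.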

They’re built in two key stages:

First, the training stage. This is when the model is fed billions of words so that it can learn what each word means and how closely related the words are.

Second comes the fine-tuning stage, where human feedback is used to refine the model: people rank the model’s responses from best to worst, and those rankings are used to steer the model towards better answers. This is called reinforcement learning from human feedback (RLHF).

Product teams are integrating with LLMs because they’re a fast track to adding AI features to their products. Building your own LLM from scratch is a lot of work, which means integrating with a third party is often much easier.

The basic technical components of an LLM integration

When we refer to an LLM integration we’re talking about the scenario where a product team decides to use someone else’s LLM rather than build their own to power their AI features. Some companies decide to build their own LLMs from scratch. SaaS product Replit, for example, decided to do this because the data they have is uniquely relevant to their customers.

But since this post is about integrations, we’ll focus mostly on the scenario where a team uses someone else’s LLM.

As we noted in a previous post on chatbots, the most popular choice for powering AI features in the products we chose to look at was OpenAI.

And if you’re considering which model to use for your own product, it’s important to understand the two main approaches to ‘integrating’ with LLMs.

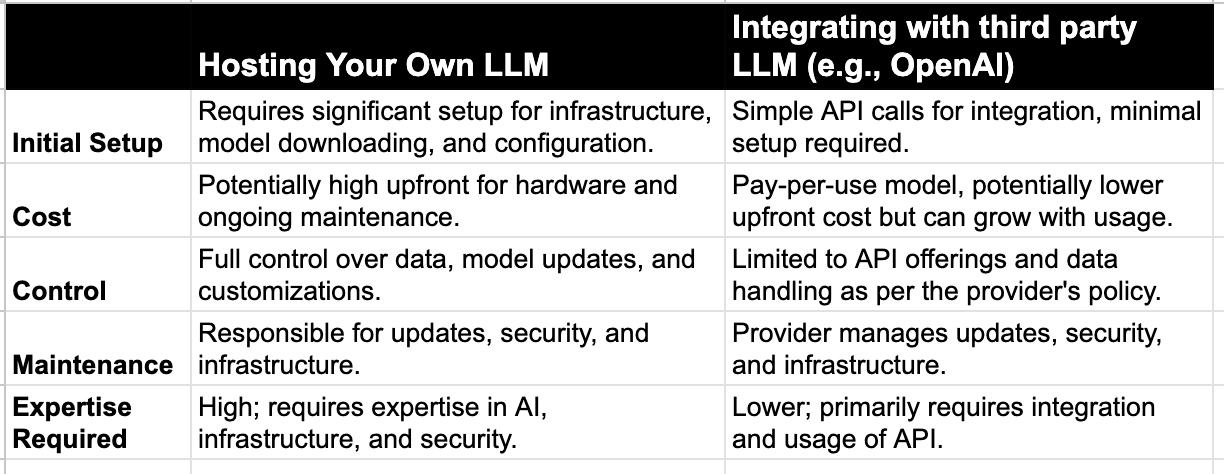

The two approaches to using LLMs for product teams

LLMs can be open source or accessed via third-party APIs. With an open source model, teams host the LLM and its infrastructure themselves rather than integrating with a third-party API.

This is one of the sources of contention between Elon Musk and OpenAI at the moment. Musk’s argument is: if OpenAI is not open source, is it really “open”? We’ll leave that one to the lawyers, but here are the core differences between the two approaches:

Now onto the more technical bits. For this Knowledge Series, we’ll focus on the second of these options: integrating with LLMs via third-party APIs.
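To make the API option a little more concrete, here's a minimal sketch of the kind of request an engineer might prepare for OpenAI's chat completions endpoint. Treat the details as assumptions: the model name, the system instruction, the `build_request` helper and the placeholder key are all invented for illustration, and a real integration would more likely use the provider's official library. The sketch only builds the request; the part that actually sends it is commented out because it needs a real API key.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_request(user_text, api_key, model="gpt-4o-mini"):
    """Package the user's text into an HTTP request for the LLM provider."""
    payload = {
        "model": model,  # assumed model name; swap in whichever model you use
        "messages": [
            # The "system" message sets the behaviour; the "user" message is the input.
            {"role": "system", "content": "You summarise notes for busy product managers."},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# Hypothetical placeholder key, used only if no real key is set.
api_key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
request = build_request("Summarise: the team agreed to ship the beta on Friday.", api_key)

# Sending the request requires a real key and a network connection:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

The takeaway for a product conversation: your product sends text (plus some instructions) to the provider over the internet, and the provider sends generated text back. Everything else in an integration is built around that exchange.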

An end to end example of an LLM integration

Here’s a diagram of what an LLM integration might look like: